Presentation

At Meesho, we value running our tech framework in the most expense productive way while accomplishing high accessibility and execution. We as of late centered around upgrading Redis for one of our utilization situations where infra costs were expanding.

We drove a 90% decrease in our Redis framework costs. Keep perusing to find out about the procedures we utilized to accomplish this result and how you can apply these expense saving strategies to your own activities!

Issue Proclamation

At Meesho, we furnish providers with the open door to grandstand notices on items, empowering them to build the perceivability of their items that could somehow slip through the cracks by clients. The essential goal of this component is to upgrade their Profit from Venture (return for capital invested). In any case, we recognize the meaning of safeguarding a smooth client experience. Finding some kind of harmony, we fostered a separating instrument that evaluates items for clients in light of their past collaborations throughout some set time span. This guarantees that clients are not immersed with redundant substance, meanwhile enabling providers to arrive at their main interest group successfully.

To effectively carry out the Channel System, we required the accompanying fundamental parts:

Increase Strategy: A technique equipped for increasing and setting the cooperations in a solitary call to limit Information/Result Tasks Each Second (IOPS) and improve effectiveness.

TTL (Time-to-Live): The capacity to establish a point in time based lapse for keys, guaranteeing that cooperation information is naturally taken out after the set time span, forestalling superfluous information development.

High Throughput and Low Reaction Time Information Source: Given the huge volume of traffic we experience, ongoing separating is essential during administration. In this manner, getting the vital information with negligible reaction time and guaranteeing high throughput support is fundamental.

To meet the predetermined necessities, Redis ended up being an optimal decision, offering every one of the essential parts. Moreover, since the put away information isn’t urgent to the mission, it tends to be put away as a reserve without the requirement for relentless capacity

Arrangements

Age – 1: Execution utilizing Redis Key Worth

In the underlying progressive phases, the accompanying calculation was developed:

A joined Redis Key comprising of Client and Item, with a Chance To-Live (TTL) set at 24 hours.

The quantity of collaborations for an item by a client is put away as the related Worth.

At the point when a collaboration occasion for an item by a client is gotten, the relating Worth is increased.

While serving, items with a worth surpassing a predefined edge are sifted.

The ensuing segment frames the memory usage in Redis utilizing the previously mentioned calculation for a solitary client:

In the above diagram X-pivot shows the quantity of items a client has cooperated with and Y-hub shows the memory utilization on Redis. From this, it is clear that as client item collaborations increment, there is an observable sharp ascent in memory use. This represents a worry, especially at scale, where the pace of memory utilization becomes significant.

In the wake of directing a top to bottom examination of the carried out calculation, we found a critical expansion in the quantity of keys in Redis with the expansion in cooperation. This happens from our decision of utilizing a joined key involving both ProductId and UserId. Subsequently, the complete number of keys in Redis approaches the result of the quantity of clients and the quantity of items. In Redis, each key causes an above of around 40 bytes because of its support inside the Redis pail. Therefore, a bigger number of keys straightforwardly means expanded memory utilization.

Presently our goal was to devise another information structure that can successfully decrease the quantity of keys in Redis while as yet meeting our functional necessities.

Age – 2: Execution utilizing Redis Hash

In our mission to devise a clever information structure pointed toward diminishing the sheer volume of keys, we anticipated breaking the connection of UserId and ProductId in the key since having just UserId or ProductId in the essential key wouldn’t expand the all out number of keys in redis with the expansion in cooperation between fixed set of items and clients subsequently decrease the memory utilization.

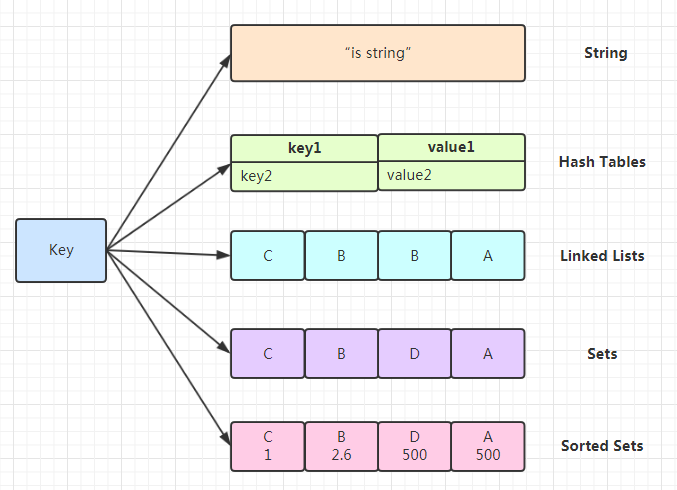

Presently redis has not many information structures where one can straightforwardly get to the singular things put away inside it like Redis Set and Redis Hash. For our utilization case we picked Redis Hash as the need might have arisen to store the planning of ProductId and Cooperation represent a mark against the essential key which is UserId. Subtleties on Redis Hash can be seen as here.

Fundamentally, Redis Hash capabilities is an information type for values put away inside Redis. It looks similar to a word reference put away as a worth, where individual keys can be straightforwardly gotten to by means of Redis orders.

We chose to make UserId as essential key and store planning of ProductId and Connection Consider esteem as a result of following difficulties.

Challenges with involving Redis Hash as information type in Redis

Challenge – 1: hash-max-ziplist-sections

Redis Hashes are inside enhanced utilizing ziplist which basically an exceptionally encoded doubly connected list that is streamlined for memory reserve funds and redis jam this design until the quantity of keys in the Redis Hash comes to or surpasses the setup boundary known as hash-max-ziplist-sections in Redis. More subtleties on internals can be found in a blog entry here.

When this limit is crossed, Redis Hash keys progress to being kept up with in a Redis pail, similar as the essential Redis keys themselves. Thus, it becomes fundamental to painstakingly change the hash-max-ziplist-passages boundary to line up with your particular prerequisites. Setting it too high can prompt elevated reaction times for Redis orders, considering that keys keep on being put away in list design for hash sizes up to the hash-max-ziplist-sections limit. We thely affect space utilization in Redis as the hash key size changes across various hash-max-ziplist-sections values.

For extra experiences on this design, kindly allude to the authority Redis documentation and a useful blog entry by Peter Bengtsson.

Following are the diagrams portraying expansion in memory against the expansion in Redis hash size for various hash-max-ziplist-sections.

As we can find in the above diagrams as the quantity of items put away in Redis hash increments past the hash-max-ziplist-passages memory utilization shoots up.

Presently with UserId as the essential key client can communicate with restricted items in a predefined time span.

We selected to hold the ‘hash-max-ziplist-passages’ boundary at its default worth of 512. This decision lines up with our information examination, which shows that 95% of clients on our foundation cooperate without any than 512 promotion items in the expected time span. Thus, any likely effect from the excess 5% of clients surpassing this cutoff is irrelevant, and it guarantees that our Redis activities keep on conveying ideal reaction times.

Challenge-2: Time-to-live(TTL)

Keys inside Redis Hash can’t have its individual TTL. TTL must be alloted for the essential Redis key and when it lapses the whole Redis hash connected as worth to this key gets eliminated. Presently this will be the issue where we need to separately control the expiry of keys inside hash and not at the essential key level as regardless of when the new thing was included the Redis Hash all put away things in hash will get expelled when essential key terminates consequently accurately picking the essential key is significant.

With UserId as the essential key we had the option to control the expiry of every one of their cooperations after the given time span going against the norm with productId as essential key we wouldn’t have the option to control the expiry at Client level.

Execution Approach

Considering the difficulties referenced above, we have executed the accompanying calculation:

Redis Key currently compares to the UserId, set with a TTL (Time-To-Live) until indicated time span.

The worth related with this Redis Key is a Redis Hash, wherein the item id fills in as the hash key, and its worth addresses the communications on the item.

Whenever a client connects with a specific item, the separate Redis Hash esteem is increased.

During item recovery, we sift through items with values outperforming a predefined limit.

Redis Composition for this calculation:

Redis Lua Content to Addition Hash Field and Set Expiry

We selected Lua Content to augment hash fields and set expiry on the grounds that, any other way, we would have to settle on two separate decisions to Redis for every collaboration: one for increasing and one more for setting the expiry of the essential key. Nonetheless, utilizing a huge Lua script in Redis presents a test; when the content contains various orders, it can influence the exhibition of your application’s Redis, making different orders be ended until the Lua script is executed. To alleviate this, we planned our content to incorporate just two orders, forestalling such execution issues.

In the above Lua script, we utilize the Redis hincrby order to increase a predefined field inside a hash information structure. If the augmented worth becomes 1 (showing that the field was beforehand non-existent), we set an expiry utilizing the terminate order on the hash key to control its lifetime in Redis.

Arranging RedisScript in Spring Boot

In your Spring Boot application, make a design class to stack and arrange the Lua script as a RedisScript bean.

Lua Content Execution

End

At the point when we carried out the Last Methodology with no adjustment in information volume, our Redis absolute size utilization diminished essentially, going from 1 terabyte to under 100 gigabytes. This brought about a striking 90% expense decrease on Redis. This represents the significant space enhancement attainable while involving Redis Hash in the proper setting.